About Spatial Camera Tracker

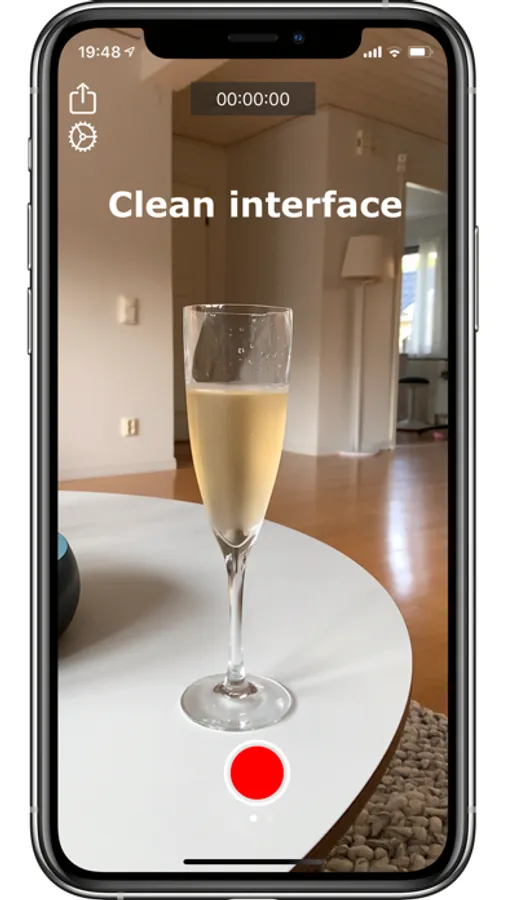

Are you a moviemaking enthusiast? Do you want to add CG content to your movies and need an easy way to synchronise real and virtual cameras? Or are you looking to create Mixed Reality footage? Then Spatial Camera Tracker is for you!

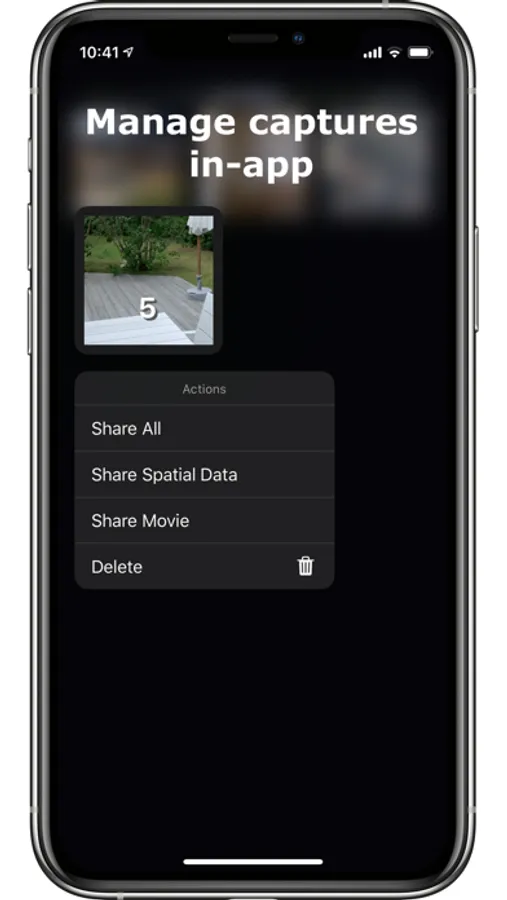

Spatial Camera Tracker is a virtual production tool that lets you capture HD video together with positional tracking information for both the camera and the person being filmed! No external sensors are needed, which means you can record anywhere and over huge distances using only your iPhone or iPad! Please note that not all features are available on all devices; see below for details.

Import the tracking information into Unreal or Unity to drive virtual cameras that replay the original camera movements, capture CG footage, and combine it with your real-life footage using standard video editing software or in-engine techniques. Because the tracking information is recorded, you can iterate on your virtual scenes until you are happy with the result.

Spatial Camera Tracker offers three capture modes:

Camera World Tracking - 6DOF (six degrees of freedom) tracking of the phone's position and rotation in 3D space. Use this mode to align CG greenscreen footage with camera-recorded footage. Available on any ARKit-supported device.

Green Screen Recording - Same as Camera World Tracking, but with a green screen effect that segments out any people being recorded. Use this mode to align camera-recorded footage with CG-rendered scenes. Requires a device with an A12 chip or newer!

Body Tracking - Full skeleton joint tracking of a single person being filmed. Use this mode to anchor CG effects to the person being filmed. Requires a device with an A12 chip or newer!

LiDAR support - Spatial Camera Tracker can also capture the surrounding environment geometry using the LiDAR sensor on LiDAR-equipped iPad Pro models! Use this mesh to line up CG objects and effects with the recorded real-world footage, or simply to help recreate a virtual version of the real world.

Plugins - To make use of the recorded spatial data, use one of the plugins hosted on GitHub:

Unreal: https://github.com/Kodholmen/SCT-Unreal

Unity: https://github.com/Kodholmen/SCT-Unity
