About P.A.R.T.

Welcome to our application for Portable Automated Rapid Testing (PART), a program designed to assess auditory processing abilities across a wide range of tasks. We are grateful to the National Institutes of Health and the Department of Veterans Affairs for supporting the development of this application and the many studies on which it is based.

PART was designed to allow users to perform high-quality assessments of auditory processing abilities in a wide variety of settings. We believe that the nervous system processes sound in many complex ways and that hearing health is best described in terms of how well a listener is able to make use of the multiple dimensions on which sounds can vary.

This application includes a wide variety of auditory tasks, all of which have been shown to be useful for assessing auditory function in the laboratory. The set of tasks was chosen by the PART development team, which is led by Frederick J. Gallun at the National Center for Rehabilitative Auditory Research, David Eddins at the University of South Florida, and Aaron Seitz at the University of California, Riverside (UCR). The program is a production of the UCR Brain Game Center, a research unit focused on brain fitness methods and applications.

Assessments and Batteries:

This application is continually being upgraded and already includes assessment tools that can measure the following abilities:

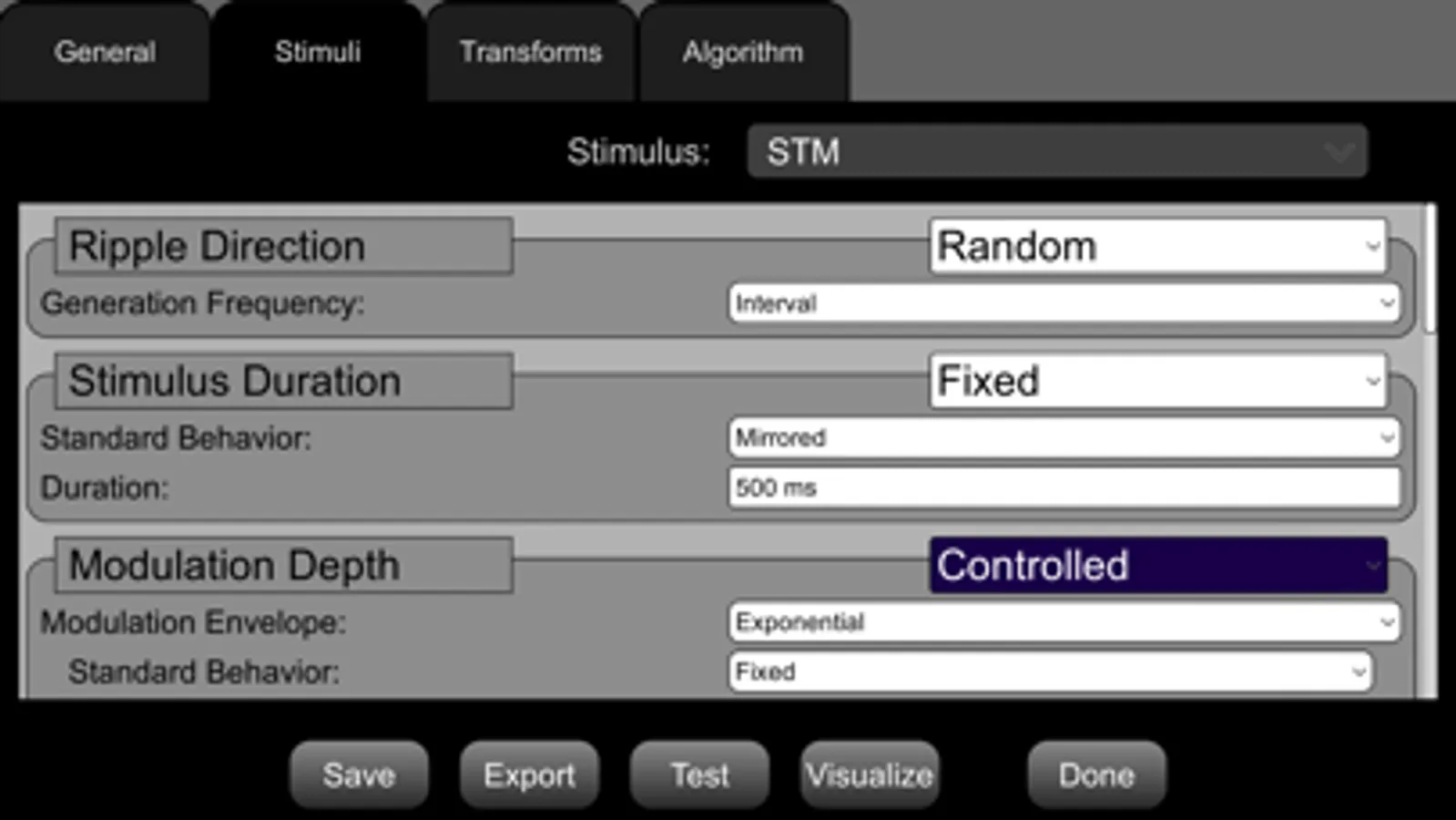

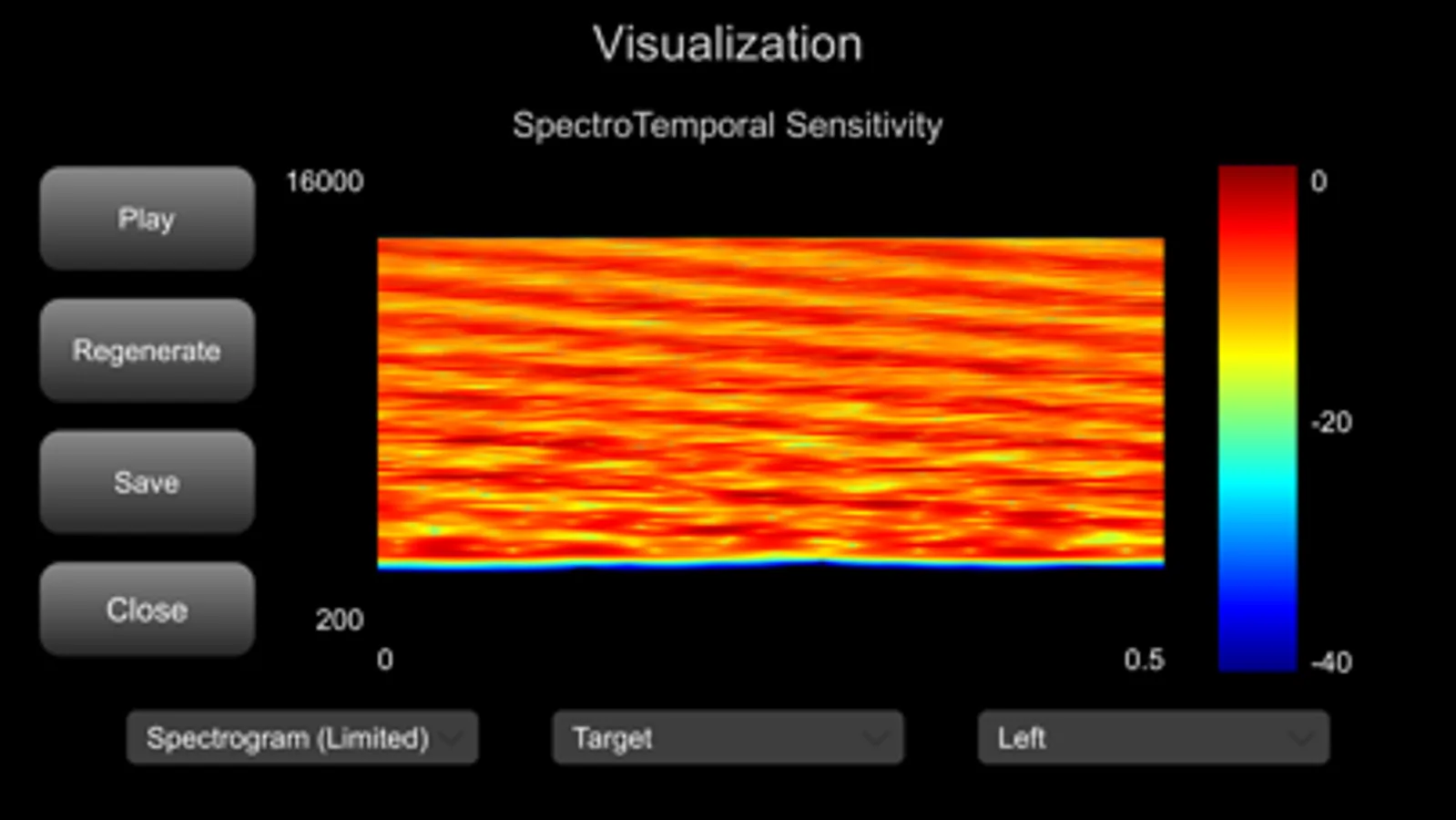

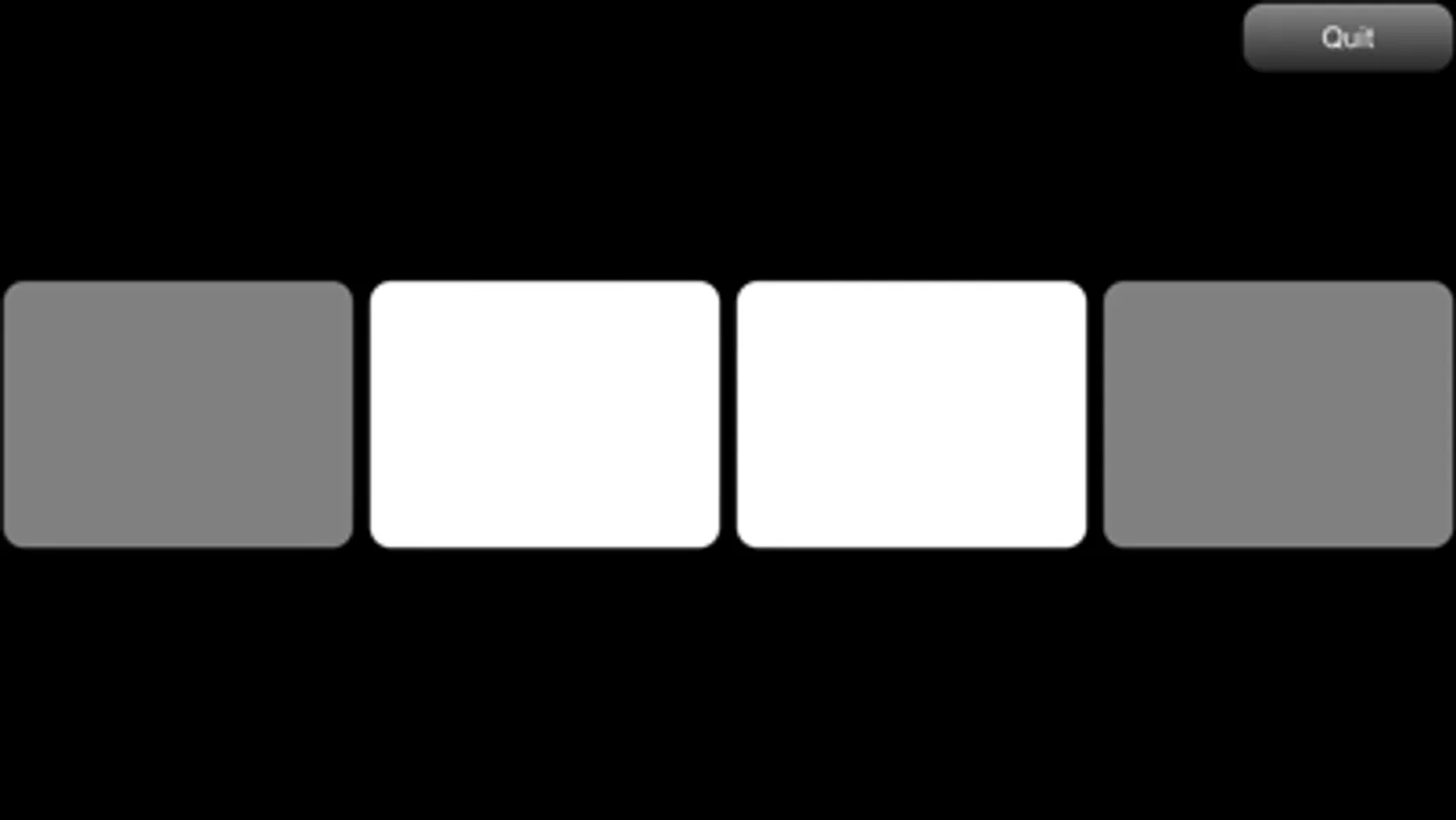

Temporal Sensitivity, Spectral Sensitivity, Spectrotemporal Sensitivity, Binaural Sensitivity, Spatial Release from Speech on Speech Masking, Informational Masking (Multiple Burst Paradigm)

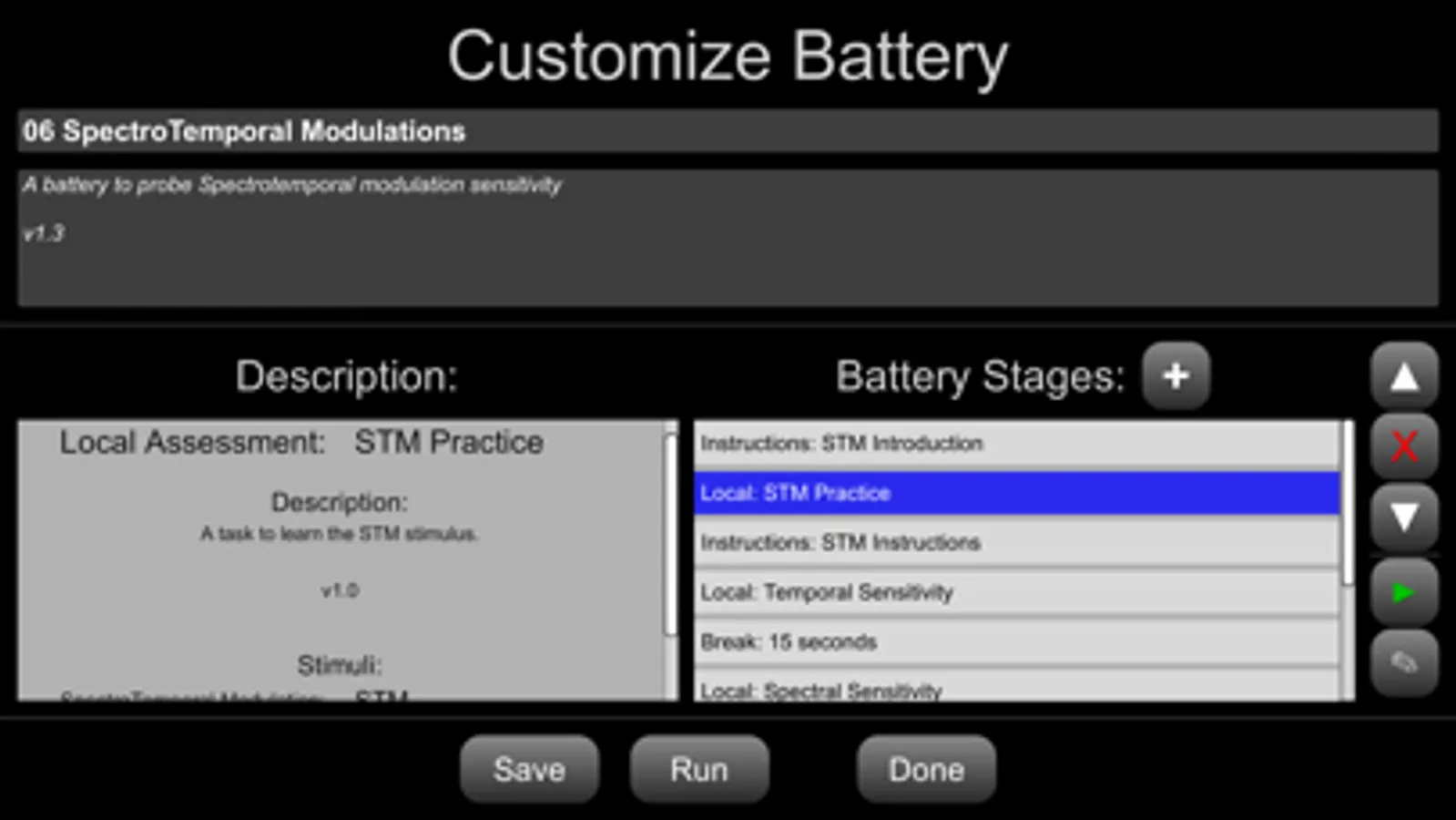

Default batteries are included. They present limited instructions and examples and include a number of the assessments described above. Please email fgallun@gmail.com to be added to iPUG, the iPad Psychophysics Users Group, if you would like further information on the default batteries, the availability of additional custom batteries, or MATLAB scripts for analyzing user data. You can also join iPUG to have questions answered about the functionality of PART, including calibration.

See https://bgc.ucr.edu/ for more information and selected references.

Acknowledgments

This work was supported by NIH/NIDCD R01 0015051. The application represents the individual work of its authors and creators. It should in no way be considered approved by the United States Government, nor does it represent official policy of the United States Government.